How Do You Feel About Your Scar

An Immersive Virtual Reality Meditation Experience Visualizing Scar Information by Using Brainwave Data

Designed and Developed by Wanyue (Luna) Wang

Tools: Unreal Engine, Oculus Rift, Python, Emotiv BCI, Emotiv 14-channel EEG Headset

Scars are reported to play a role in the development of individuals' sense of self; in particular, individuals describe how their scars represent an aspect of their identity and of their understanding of who they have become. I designed an immersive virtual reality meditation experience that guides participants to recall their scar memories and transforms that scar information into artwork using mental states derived from EEG signals (brainwaves). I hope this experience can help individuals rethink their scars and find the beauty in them.

Before moving the project into digital space, I ran a series of experiments in physical space; the details and reasoning are documented here.

DEMO VIDEO

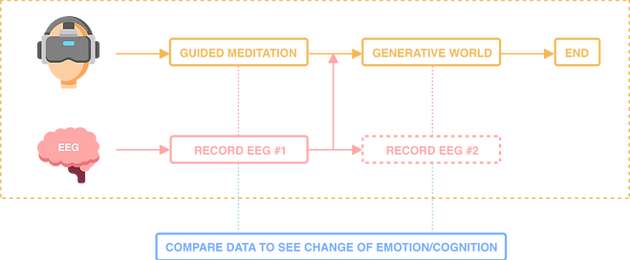

PROJECT FRAMEWORK

The project includes two phases: guided meditation and exploration of a generative world. During the meditation phase, the participant hears and sees instructions that guide them to think about their scars, including related memories, the shape of the scar, and their feelings toward it. The participant does not need to say anything. EEG data (#1) is recorded during this phase and used to generate a virtual environment for the second phase. In the second phase, the participant explores the generated environment in virtual reality, accompanied by soothing background music, by moving their body or using the VR controllers. EEG data (#2) is also recorded in the second phase so that it can be compared with the data from the first phase. All EEG data is recorded and saved anonymously to a local spreadsheet.

I am curious about the impact of the immersive VR experience on how participants think about their scars, and I wonder whether the experience can positively influence their consciousness. I plan to compare EEG data #1 and EEG data #2 to investigate changes in their brain activity and mental states. Because the study involves human subjects recruited to participate in the experience, I applied to the Institutional Review Board (IRB) for approval of the data collection and comparison.
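The comparison method is not specified here, so the following is only one possible approach, sketched under an assumption: each participant's session has already been reduced to a single average per metric (for example, their mean stress in phase 1 and in phase 2). With the same people measured twice, a paired comparison fits naturally. The hypothetical `paired_change` function returns the mean change from phase 1 to phase 2 and the paired t statistic for one metric.

```python
from math import sqrt
from statistics import mean, stdev


def paired_change(phase1, phase2):
    """Paired comparison of one metric across participants (illustrative sketch).

    phase1[i] and phase2[i] are the same participant's session averages for
    the meditation and exploration phases. Returns the mean change
    (phase 2 minus phase 1) and the paired t statistic, or None for t when
    every participant changed by exactly the same amount (zero spread).
    """
    if len(phase1) != len(phase2) or len(phase1) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [b - a for a, b in zip(phase1, phase2)]  # per-participant change
    d_mean = mean(diffs)
    d_sd = stdev(diffs)  # sample standard deviation of the changes
    t = d_mean / (d_sd / sqrt(len(diffs))) if d_sd > 0 else None
    return d_mean, t
```

To get a p-value, the t statistic would be compared against a t distribution with n - 1 degrees of freedom (for example, with a statistics package). With a group of around ten participants, any result would need to be read cautiously.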

INTERACTION DESIGN

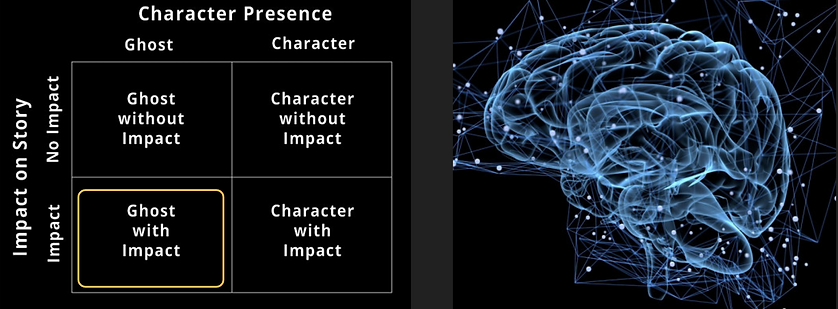

Virtual reality is often considered "a specific narrative medium alongside other narrative forms such as Theatre, Literature or Cinema." (Aylett and Louchart 2003) In this VR experience, users tell their scar stories during the meditation phase through the brain-computer interface (BCI) rather than with VR controllers. Their EEG data shapes the outcome of the second phase, where their stories are retold in an interactive and artistic way.

VISUAL DESIGN

Visual design is an important part of the experience. On the one hand, in the first phase of the experience, the visual style should convey a sense of peace and calm to help participants concentrate on their scars. In other words, there should not be too many visual elements that could distract people during the meditation phase. On the other hand, the generative-art level should be more dynamic and attractive, giving users a larger space to explore. The two stages place different functional demands on the visual style, but to keep the overall experience consistent, the visual style needs to be unified.

I made a mood board of potential visual inspirations and also used my previous experiments as a reference.

AUDIO DESIGN

The most important goal of the audio design is making the script well-structured and comprehensible. To test it, I added audio of the draft meditation script to the VR meditation scene and briefly tested it with four users to make sure that the descriptions were clear enough and the intervals between instructions were reasonable. I adjusted the soundtrack based on their feedback. The script was finalized after three iterations.

Because everyone's scar experience is different, the intervals between instructions may feel too short or too long for some people. The script may be updated as I collect more participant feedback.

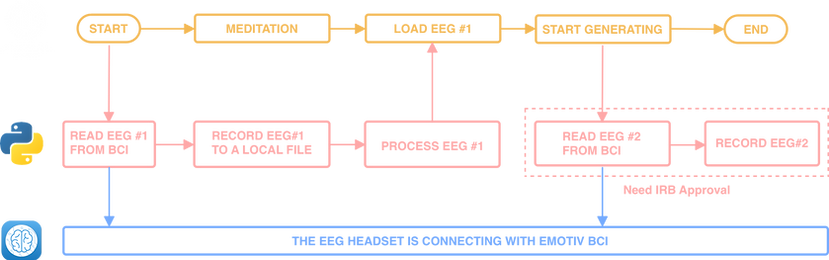

DEVELOPMENT FRAMEWORK

The hardware used in the project is an EMOTIV EPOC+ 14-channel EEG headset and an Oculus Rift VR headset. On the software side, I used Unreal Engine 4.23 for VR development, EMOTIV BCI for EEG headset calibration, and Python for automatic EEG data collection and processing. The development framework of the project is shown below.

The first step is to calibrate the EEG headset with EMOTIV BCI to ensure good connection quality. Throughout the experience, the EEG headset stays connected to EMOTIV BCI. When the experience starts, Unreal Engine triggers Python scripts that automatically collect EEG data from the headset over WebSockets using the Emotiv API and store it as local files on the investigator's (my) computer. Once the meditation is finished, Unreal Engine automatically imports the EEG data from the local file and generates a virtual environment based on it.
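The collection step can be sketched roughly as follows. This is a minimal sketch, not the project's actual script, and it assumes the Python side has already authorized and subscribed to the mental-state ("met") stream of Emotiv's Cortex API over the WebSocket. It also assumes each incoming sample arrives as JSON shaped like `{"met": [...], "time": ...}`, with column labels matching the list the subscription reports. The labels in `MET_COLS` and the helpers `parse_met_message`, `session_path`, and `save_rows` are illustrative assumptions; check the message format against the Cortex documentation for your version. The random ID in the file name is one simple way to keep the saved data anonymous.

```python
import csv
import json
import uuid
from pathlib import Path

# Assumed column labels for the "met" stream; Cortex reports the real list
# in its subscribe response, so verify it for your API version.
MET_COLS = ["eng.isActive", "eng", "exc.isActive", "exc", "lex",
            "str.isActive", "str", "rel.isActive", "rel",
            "int.isActive", "int", "foc.isActive", "foc"]


def parse_met_message(raw, cols=MET_COLS):
    """Turn one JSON message like {"met": [...], "time": t} into a flat dict.

    Returns None for messages that carry no mental-state sample,
    such as JSON-RPC replies to earlier requests.
    """
    msg = json.loads(raw)
    if "met" not in msg:
        return None
    row = {"time": msg.get("time")}
    row.update(zip(cols, msg["met"]))  # pair each label with its value
    return row


def session_path(directory, phase):
    """Anonymous file name: a random ID instead of the participant's name."""
    return Path(directory) / f"session_{uuid.uuid4().hex[:8]}_phase{phase}.csv"


def save_rows(rows, path):
    """Write parsed samples to a CSV file that the VR scene can import later."""
    rows = list(rows)
    if not rows:
        raise ValueError("no samples to save")
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)
```

In the receive loop, each message would go through `parse_met_message`, and non-`None` rows would be collected. When the meditation ends, `save_rows(rows, session_path("eeg_data", 1))` would write the file for Unreal Engine to import.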

DATA MAPPING

EMOTIV provides six types of mental state data: stress, engagement, interest, focus, excitement, and relaxation. Of these, stress, engagement, interest, and relaxation are the most closely related to the scar experience, so they are used to drive material and texture variables, generating a unique environment for the second phase. The way I mapped the mental state data is shown below.
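How the recorded samples are condensed before mapping is not described here. The sketch below assumes the simplest option, a per-metric average over the meditation phase, and keeps only the four metrics named above. The short keys (`eng`, `int`, `str`, `rel`) follow the assumed Cortex column labels, and `SELECTED` and `summarize` are hypothetical names.

```python
from statistics import mean

# Assumed short labels for the four metrics used in the project;
# focus ("foc") and excitement ("exc") are deliberately ignored.
SELECTED = {"eng": "engagement", "int": "interest",
            "str": "stress", "rel": "relaxation"}


def summarize(rows):
    """Average each selected metric over a session, skipping missing samples.

    Values may be floats or numeric strings (as read back from a CSV file);
    a metric with no valid samples is reported as None.
    """
    summary = {}
    for key, name in SELECTED.items():
        values = [float(r[key]) for r in rows if r.get(key) not in (None, "")]
        summary[name] = mean(values) if values else None
    return summary
```

Averaging smooths out moment-to-moment noise but loses how the participant's state changed during the session. Other summaries, such as a median or the final minute only, would be equally plausible choices.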

The engagement level determines the size of the object: the higher the engagement, the larger the object, which makes the scene feel more immersive. The interest level determines the color of the object: the higher the interest, the warmer the color; the lower the interest, the colder the color. The stress level determines the density of the cloud object: a higher stress level produces denser clouds, just as dense clouds in the real world are often associated with negative feelings. The offset of the object is determined by the degree of relaxation: the more relaxed the user is, the more dynamic the object becomes.
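The four rules above can be expressed as simple linear mappings. This sketch assumes each mental state has been averaged into a value between 0 and 1. Every numeric range in it is made up for illustration: the object scale of 1 to 3, the two RGB endpoints, the density range, and the offset speed range. In the project, these values drive Unreal Engine material and texture parameters whose real ranges are not given here. `map_to_visuals` is a hypothetical name.

```python
# Hypothetical parameter ranges, for illustration only.
COLD_RGB = (0.2, 0.4, 1.0)  # low interest -> bluish
WARM_RGB = (1.0, 0.5, 0.2)  # high interest -> orange


def lerp(a, b, t):
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def clamp01(x):
    """Keep a level inside the assumed 0..1 range."""
    return max(0.0, min(1.0, x))


def map_to_visuals(summary):
    """Map averaged mental-state levels to scene parameters.

    Expects numeric values for all four metrics (no None entries).
    """
    eng = clamp01(summary["engagement"])
    interest = clamp01(summary["interest"])
    stress = clamp01(summary["stress"])
    rel = clamp01(summary["relaxation"])
    return {
        # higher engagement -> larger object
        "object_scale": lerp(1.0, 3.0, eng),
        # higher interest -> warmer color, blended channel by channel
        "color_rgb": tuple(lerp(c, w, interest) for c, w in zip(COLD_RGB, WARM_RGB)),
        # higher stress -> denser clouds
        "cloud_density": lerp(0.1, 1.0, stress),
        # more relaxed -> faster offset animation, i.e. a more dynamic object
        "offset_speed": lerp(0.0, 2.0, rel),
    }
```

Linear interpolation keeps each mapping monotonic, which matches the "higher X, more Y" rules described above. An easing curve could be substituted where one end of a range should feel more pronounced.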

USER TESTING

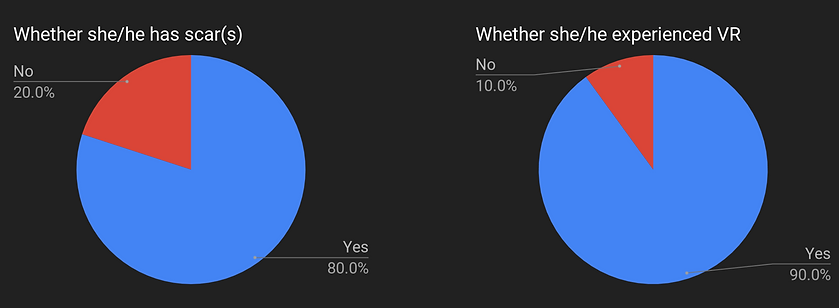

The purpose of this round of user testing was to test the interaction design and the flow of the immersive experience. I invited 6 NYU graduate students and 4 of my friends who have scars to test the experience. Nine of them had previous VR experience, and all of them were new to EEG. I worked with one user at a time.

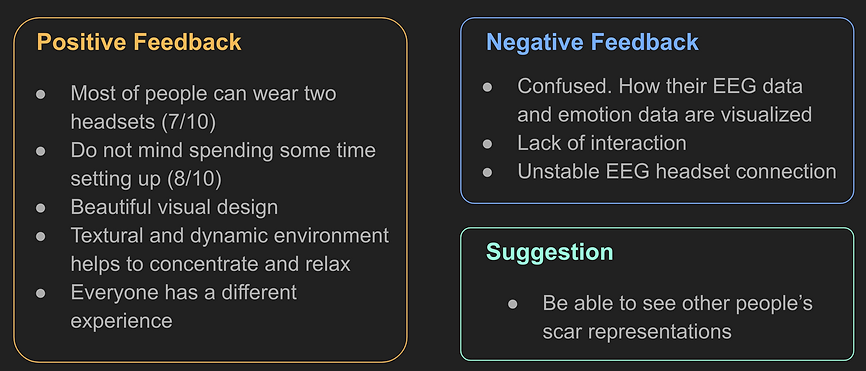

Among the 10 participants, 7 were able to finish the experience wearing both headsets; the other 3 completed the two phases of the experience separately. I observed that some of them were surprised when they experienced VR. All of them liked the experience overall, and their feedback is detailed below:

"Next time when I recall these memories, I will think of the experience and the clouds rather than just unpleasant feelings"

----- Daniel

ITERATION

Based on the user testing results and my own reflection, I built one more iteration with the following changes:

- Add a tutorial
- Add a feature for taking high-resolution screenshots in VR
- Change one cloud object to four
- Interpret the EEG (emotion states)

However, using four data visualization objects turned out to be a bad idea. Together, the different mental states reflect the participant's emotion at a given moment, so they should not be split apart. Worse, four objects distracted the audience and made the visualization more confusing. I ran a quick test of the four-object version with 5 users, and four of them confirmed this assumption, so I discarded it. To make the visualization easier to understand, I made a flyer explaining it and showed it to participants before they started the VR experience.

GALLERY

PEOPLE IN THE EXPERIENCE

This is my Master's thesis project. The pictures above are all from user testing and the exhibition. If you are interested in more details behind the project, please feel free to contact me.

Check my scar-related experiments in physical space here.

MOVING FORWARD

I extended the framework of Scar VR to another scenario.